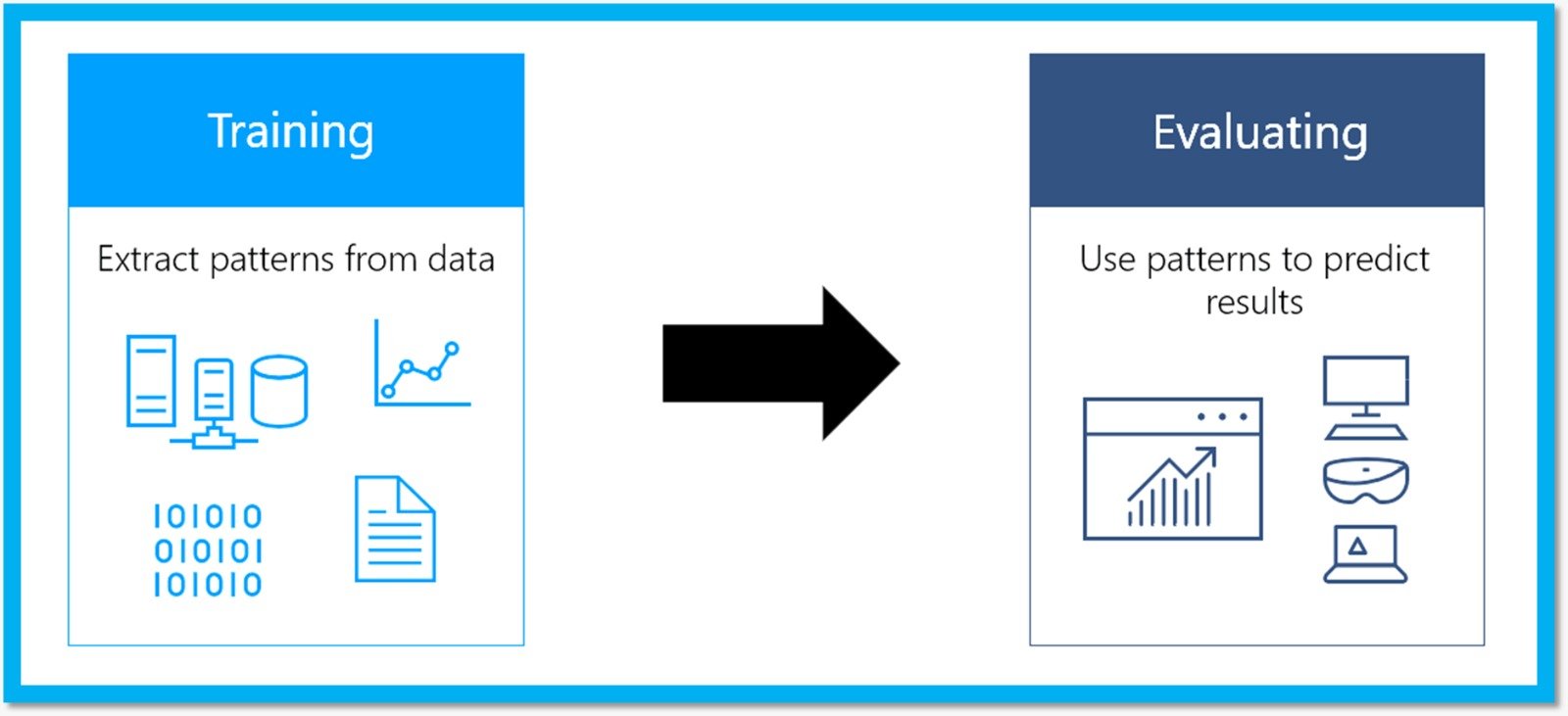

Machine learning models are algorithms or mathematical representations used to make predictions or decisions based on data. These models are trained on historical data to learn patterns and relationships, which they can then apply to new, unseen data to make predictions or classifications.

Here’s a list of common machine learning models along with their pros and cons:

- Linear Regression:
  - Pros: Simple and interpretable, efficient training and inference, widely applicable to regression tasks.
  - Cons: Assumes a linear relationship between the features and the target variable; sensitive to outliers and multicollinearity.

- Logistic Regression:
  - Pros: Efficient for binary classification, outputs class probabilities, interpretable coefficients.
  - Cons: Assumes a linear decision boundary, may underperform when relationships are complex, and regularized fits and gradient-based solvers are sensitive to feature scaling.

- Decision Trees:
  - Pros: Intuitive to understand and interpret, handles both numerical and categorical data, non-parametric.
  - Cons: Prone to overfitting without pruning or depth limits, sensitive to small variations in the data, may create biased trees when some classes dominate.

- Random Forest:
  - Pros: Reduces overfitting compared to decision trees, handles high-dimensional data well, robust to noise and outliers.
  - Cons: Less interpretable than decision trees, higher computational complexity and memory usage.

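- Gradient Boosting Machines (GBM):
  - Pros: Combines many weak learners (typically shallow trees), each fitted to reduce the remaining error of the ensemble, to improve predictive performance; handles complex interactions between features.
  - Cons: Prone to overfitting if not tuned properly; trees are built sequentially, so training is harder to parallelize and often slower than a random forest; sensitive to noisy data.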

- Support Vector Machines (SVM):
  - Pros: Effective in high-dimensional spaces, versatile with different kernel functions, robust to overfitting with appropriate regularization.
  - Cons: Memory-intensive for large datasets, computationally expensive during training, less interpretable with complex kernels.

- K-Nearest Neighbors (KNN):
  - Pros: Simple and intuitive, non-parametric, no explicit training phase (the training data is simply stored), naturally handles multi-class classification.
  - Cons: Computationally expensive at inference time, sensitive to irrelevant or redundant features, requires careful feature scaling.

- Neural Networks:
  - Pros: Capable of learning complex patterns and representations, scalable to large datasets, state-of-the-art performance in various domains.
  - Cons: Requires large amounts of data for training, computationally intensive, prone to overfitting without regularization, black-box nature.

- Naive Bayes:
  - Pros: Simple and fast, efficient with high-dimensional data, handles categorical features well, relatively robust to irrelevant features.
  - Cons: Assumes features are conditionally independent given the class, may not capture complex relationships in the data, sensitive to imbalanced classes.

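- Clustering Algorithms (e.g., K-Means, DBSCAN):
  - Pros: Identifies natural groupings in data, useful for exploratory analysis and anomaly detection.
  - Cons: Results depend on algorithm-specific settings. K-Means requires specifying the number of clusters (K), is sensitive to initialization, and performs poorly with non-convex or irregularly shaped clusters; DBSCAN does not need K but depends on its neighborhood radius and minimum-points parameters and struggles when cluster densities vary.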
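
To make the Linear Regression entry concrete, here is a minimal sketch of ordinary least squares for a single feature. It is written in plain Python so it runs without NumPy or scikit-learn. The function names `fit_simple_linear_regression` and `predict` are illustrative, not from any library. The closed-form slope is the covariance of x and y divided by the variance of x. The demo also shows how one outlier pulls the fitted line, which is the outlier sensitivity listed under Cons.

```python
# Illustrative sketch: function names are hypothetical, not a library API.


def fit_simple_linear_regression(xs, ys):
    """Fit y ≈ slope * x + intercept by ordinary least squares (one feature)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x has zero variance, so the slope is undefined")
    slope = sxy / sxx
    # The least-squares line always passes through (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Apply the fitted line to a new input."""
    return slope * x + intercept


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0, 5.0]
    clean = [3.0, 5.0, 7.0, 9.0, 11.0]         # exactly y = 2x + 1
    with_outlier = [3.0, 5.0, 7.0, 9.0, 30.0]  # last target replaced by an outlier
    print(fit_simple_linear_regression(xs, clean))
    print(fit_simple_linear_regression(xs, with_outlier))
```

On the clean data the fit recovers slope 2 and intercept 1. Changing only the last target to 30 raises the slope to about 5.8.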
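
For the Logistic Regression entry, the sketch below trains a binary logistic regression by batch gradient descent on the log-loss, again in plain Python. The learning rate, the epoch count, and the names `fit_logistic_regression` and `predict_proba` are illustrative assumptions, and no regularization is applied. The output is the sigmoid of a linear score, which is why the model yields probabilities and why its decision boundary is linear.

```python
import math

# Illustrative sketch: function names and default hyperparameters are hypothetical.


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Binary logistic regression trained by batch gradient descent on log-loss.

    X is a list of feature vectors, y a list of 0/1 labels.
    Returns (weights, bias). No regularization is applied.
    """
    n, n_features = len(X), len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of log-loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the average gradient over the whole dataset
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Probability that x belongs to class 1."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


if __name__ == "__main__":
    # A single, already-centered feature; class 1 for positive values
    X = [[-2.0], [-1.5], [-1.0], [1.0], [1.5], [2.0]]
    y = [0, 0, 0, 1, 1, 1]
    w, b = fit_logistic_regression(X, y)
    print(predict_proba(w, b, [-1.2]), predict_proba(w, b, [1.2]))
```

The demo feature is already centered. On uncentered or badly scaled features, plain gradient descent like this converges more slowly.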
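
Decision trees are commonly grown greedily. At each node the learner picks the split that most reduces an impurity measure, and Gini impurity is the criterion used by CART. The sketch below shows a single such split on one numeric feature. Growing a full tree would repeat it recursively on each side, and pruning or a depth limit is what counters the overfitting noted under Cons. The names `gini` and `best_split` are illustrative.

```python
from collections import Counter

# Illustrative sketch of one greedy split; not a full tree learner.


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 means pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Find the threshold on one numeric feature that minimises weighted Gini.

    Returns (threshold, impurity); points with value <= threshold go left.
    A threshold of None means no split improves on the unsplit node.
    """
    n = len(values)
    best = (None, gini(labels))
    # Candidate thresholds: every distinct value except the largest
    for threshold in sorted(set(values))[:-1]:
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / n
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


if __name__ == "__main__":
    print(best_split([1, 2, 3, 10, 11, 12], ["a", "a", "a", "b", "b", "b"]))
```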
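
The K-Nearest Neighbors entry says there is no training phase, and the sketch below makes that visible. "Fitting" amounts to keeping the labelled points, and every distance computation happens per query. It uses Euclidean distance via `math.dist` (Python 3.8+) and a simple majority vote. `knn_predict` is an illustrative name. Because raw distances are used, features on larger numeric scales dominate the vote, which is why the entry calls for careful feature scaling.

```python
import math
from collections import Counter

# Illustrative sketch: knn_predict is a hypothetical helper, not a library API.


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    There is no fitting step: the training data is simply kept and scanned
    on every call, so inference cost grows with the dataset size.
    """
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training points")
    # Sort all stored points by Euclidean distance to the query and keep k
    neighbours = sorted(
        zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query)
    )[:k]
    votes = Counter(label for _, label in neighbours)
    # On a tie, most_common returns the label encountered first in the
    # distance-sorted neighbour list
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
    y = ["a", "a", "a", "b", "b", "b"]
    print(knn_predict(X, y, (1.5, 1.5)), knn_predict(X, y, (6.5, 6.5)))
```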
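
For the Naive Bayes entry, here is a sketch of categorical Naive Bayes with add-alpha (Laplace) smoothing. The score for each class adds one independent log-likelihood term per feature, which is exactly the conditional-independence assumption listed under Cons. Working in log space avoids numerical underflow. The tuple-based model representation and the function names are illustrative assumptions, not a library API.

```python
import math
from collections import Counter, defaultdict

# Illustrative sketch: model layout and function names are hypothetical.


def fit_naive_bayes(X, y, alpha=1.0):
    """Count-based categorical Naive Bayes with add-alpha (Laplace) smoothing.

    X is a list of tuples of categorical values, y a list of class labels.
    """
    class_counts = Counter(y)
    # feature_counts[label][j][value]: times feature j took `value` within class `label`
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    feature_values = defaultdict(set)  # distinct values observed for feature j
    for xi, label in zip(X, y):
        for j, value in enumerate(xi):
            feature_counts[label][j][value] += 1
            feature_values[j].add(value)
    return class_counts, feature_counts, feature_values, alpha


def predict_naive_bayes(model, x):
    """Return the class with the highest log posterior score for x."""
    class_counts, feature_counts, feature_values, alpha = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total)  # log prior
        # "Naive" step: add one independent log-likelihood term per feature;
        # smoothing keeps unseen (class, value) pairs from zeroing the score
        for j, value in enumerate(x):
            num = feature_counts[label][j][value] + alpha
            den = count + alpha * len(feature_values[j])
            score += math.log(num / den)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


if __name__ == "__main__":
    X = [("red", "round"), ("red", "round"), ("green", "round"),
         ("yellow", "long"), ("yellow", "long"), ("green", "long")]
    y = ["apple", "apple", "apple", "banana", "banana", "banana"]
    model = fit_naive_bayes(X, y)
    print(predict_naive_bayes(model, ("red", "round")))
    print(predict_naive_bayes(model, ("green", "long")))
```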
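
The clustering entry can be illustrated with K-Means using Lloyd's algorithm. DBSCAN works differently and is not covered by this sketch. The code makes the K-Means drawbacks explicit: the caller must supply `k`, and the result depends on the randomly chosen starting centroids. Those are controlled here by a `seed` parameter, which, like the function name `kmeans`, is a hypothetical choice for illustration.

```python
import math
import random

# Illustrative sketch: `kmeans` and its `seed` parameter are hypothetical.


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm for K-Means on a list of equal-length tuples.

    Returns (centroids, assignment), where assignment[i] is the cluster
    index of points[i]. Different seeds can give different results.
    """
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k of the input points
    assignment = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:
            break  # converged: assignments stopped changing
        assignment = new_assignment
        # Update step: move each centroid to the mean of its assigned points
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centroids, assignment


if __name__ == "__main__":
    pts = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
    print(kmeans(pts, k=2))
```

With well-separated groups like the demo data, any starting pair converges to the same two clusters. On overlapping or non-spherical data, different seeds can settle on different local optima.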

Each machine learning model has its own strengths and weaknesses, and the choice of model depends on the specific characteristics of the dataset, the nature of the problem, and the desired trade-offs between interpretability, accuracy, and computational efficiency.